Apple confirms CSAM detection only applies to photos, defends its method against other solutions

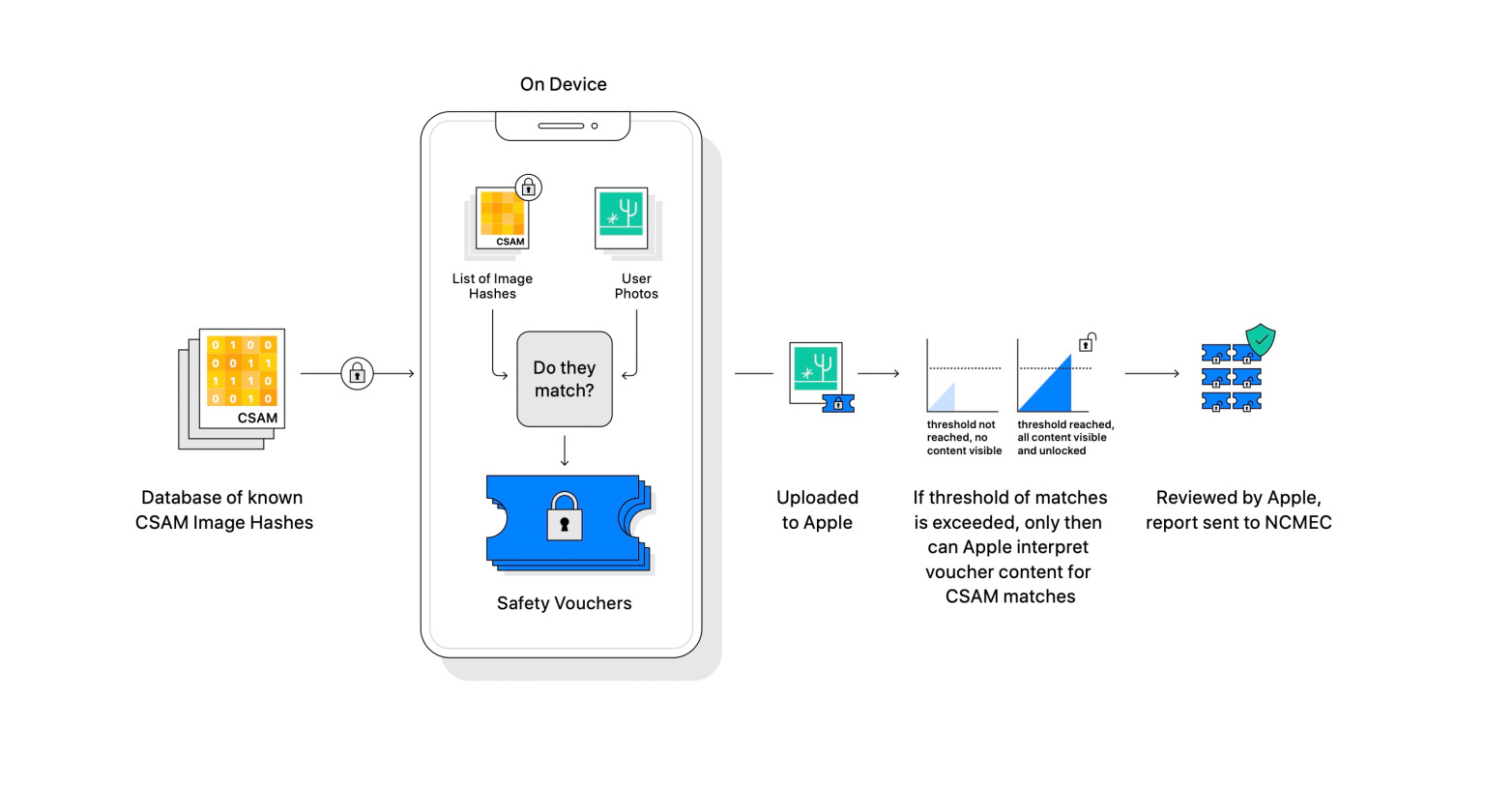

<p>Apple continues to offer clarity around the CSAM (child sexual abuse material) detection feature it announced last week. In addition to a detailed frequently asked questions document published earlier today, Apple has now also confirmed that CSAM detection only applies to photos stored in iCloud Photos, not videos.</p>

<p>The company also continues to defend its implementation of CSAM detection as more privacy-friendly and privacy-preserving than the approaches used by other companies.</p>

<p><a href="https://9to5mac.com/2021/08/09/apple-csam-detection-solution/#more-743343" class="more-link">more…</a></p>

<p>The post <a rel="nofollow" href="https://9to5mac.com/2021/08/09/apple-csam-detection-solution/">Apple confirms CSAM detection only applies to photos, defends its method against other solutions</a> appeared first on <a rel="nofollow" href="https://9to5mac.com">9to5Mac</a>.</p>
